AI Week lands in FL: Voters are using AI more, trusting it less, and want homegrown regulation

New survey reveals bipartisan consensus on concerns and policy preferences

In just the past couple of years, AI chatbots have gone from novelty to everyday companions. For many, what began with “draw pop art of an elephant dressed as Abraham Lincoln walking on the moon while smoking a cigar!” is now a full-time colleague, mental health therapist, and fact-checker all in one.

The risks have escalated just as quickly. Families in multiple states have sued AI companies, alleging that chatbots encouraged vulnerable teenagers toward self-harm or suicide rather than steering them to real help. Researchers have also documented AI systems that, when pushed, readily produce antisemitic slurs, praise for Hitler, or other bigoted content – making the technology feel less like a neutral tool and more like a force that might deepen problems we already have.

Against this backdrop, the Florida Legislature is holding its first official AI Week in Tallahassee. House Speaker Daniel Perez has directed committees to devote their December 8-12 interim meetings to examining artificial intelligence and its impact on the state. Governor Ron DeSantis has proposed an Artificial Intelligence Bill of Rights for Floridians, with provisions on data privacy, parental controls, and limits on exploitative uses of people’s images and voices.

So what do Florida voters actually think about AI?

To answer that, our team at Sachs Media conducted a statewide survey in November and compared it with the results of an identical survey in September 2023. Each survey polled more than 1,000 voters drawn from a random sample of the Florida voter file. The results show a public that is more engaged with AI but more uneasy about where it’s going – and very clear about wanting guardrails.

Adoption is soaring. Enthusiasm is not.

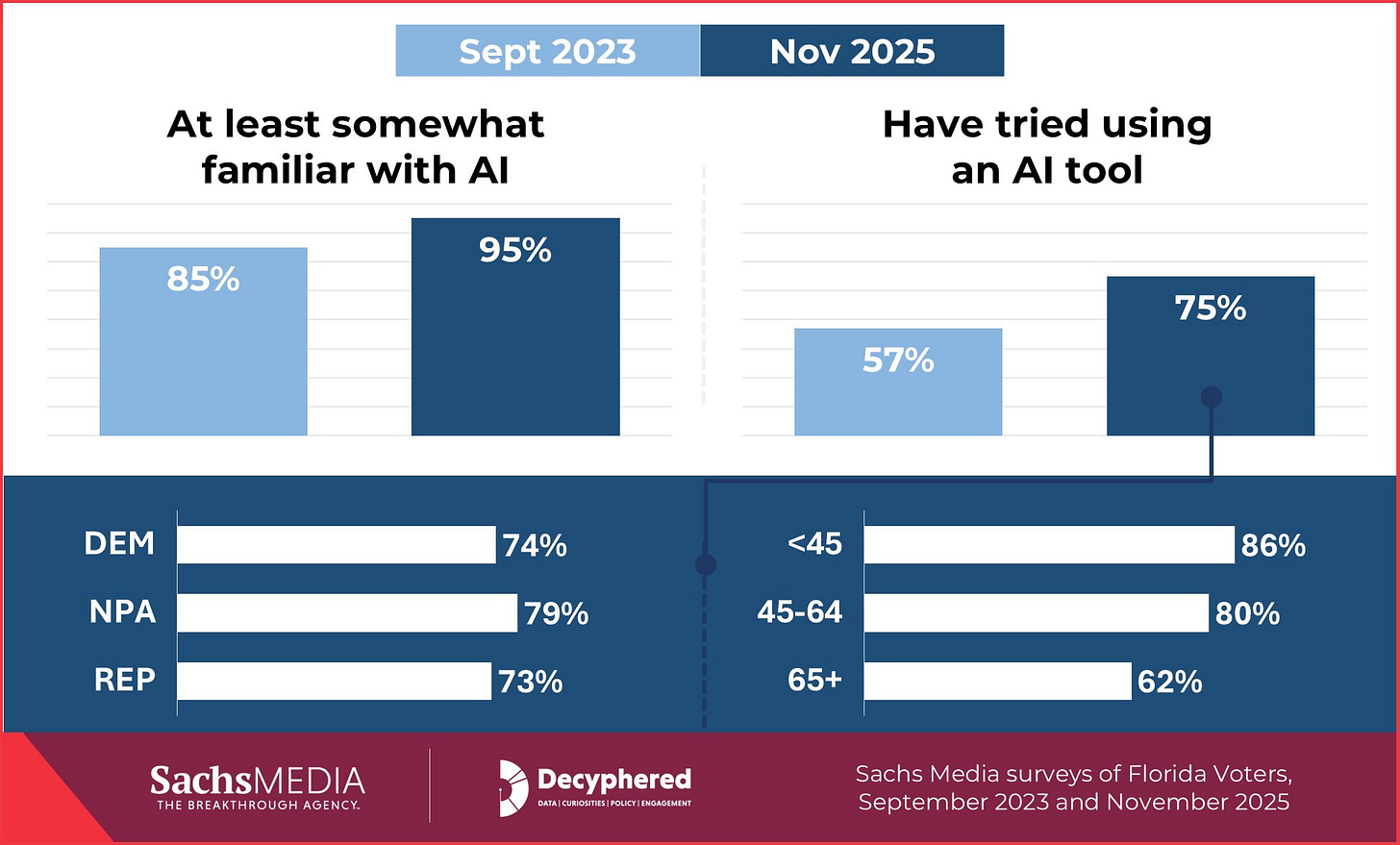

Between 2023 and 2025, Floridians’ contact with AI exploded. Two years ago, 85% said they were at least somewhat familiar with AI; by 2025 that had climbed to 95%. Over the same period, the share who have tried an AI tool rose from 57% to 75%. AI use is broad across party lines: Around three-quarters of Democrats, Republicans, and nonpartisans all now say they have used an AI tool.

Unsurprisingly, younger adults lead the way, with about 86% of those under 45 reporting hands-on experience. But even roughly 62% of seniors have dabbled.

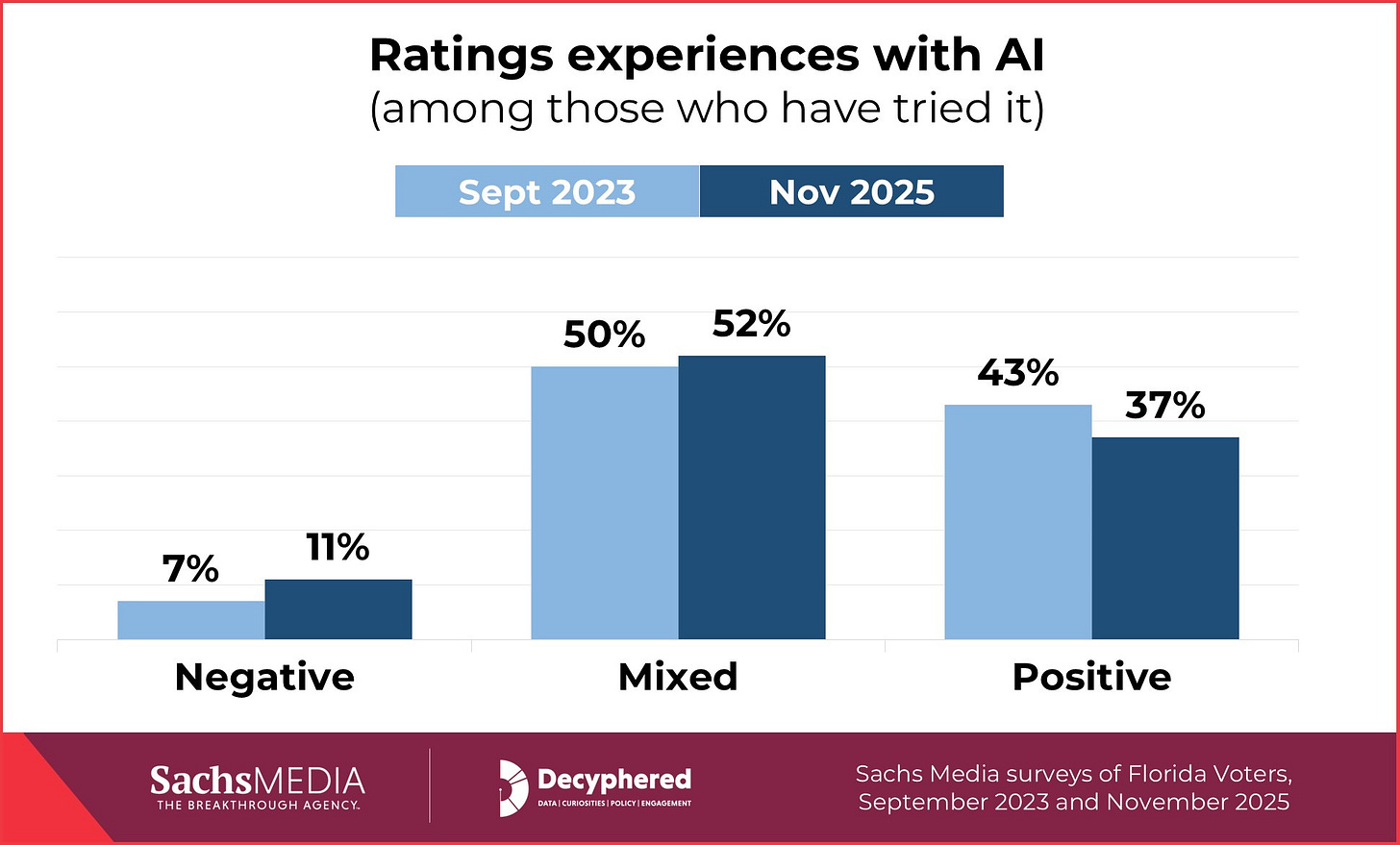

Yet when we look at how those users rate their experiences, optimism has cooled. Positive ratings of AI sagged from 43% in 2023 to 37% in 2025, while negative ratings nearly doubled.

In other words, AI has moved into the mainstream faster than public comfort. Voters still see usefulness and novelty, but their interactions with AI are running into glitches and biased answers, while alarming headlines and new worries about how their data is being used add to the unease.

This combination of high usage and growing skepticism is exactly the moment when people start looking to policymakers for help.

Florida vs. the feds on regulating AI: Voters want homegrown rules

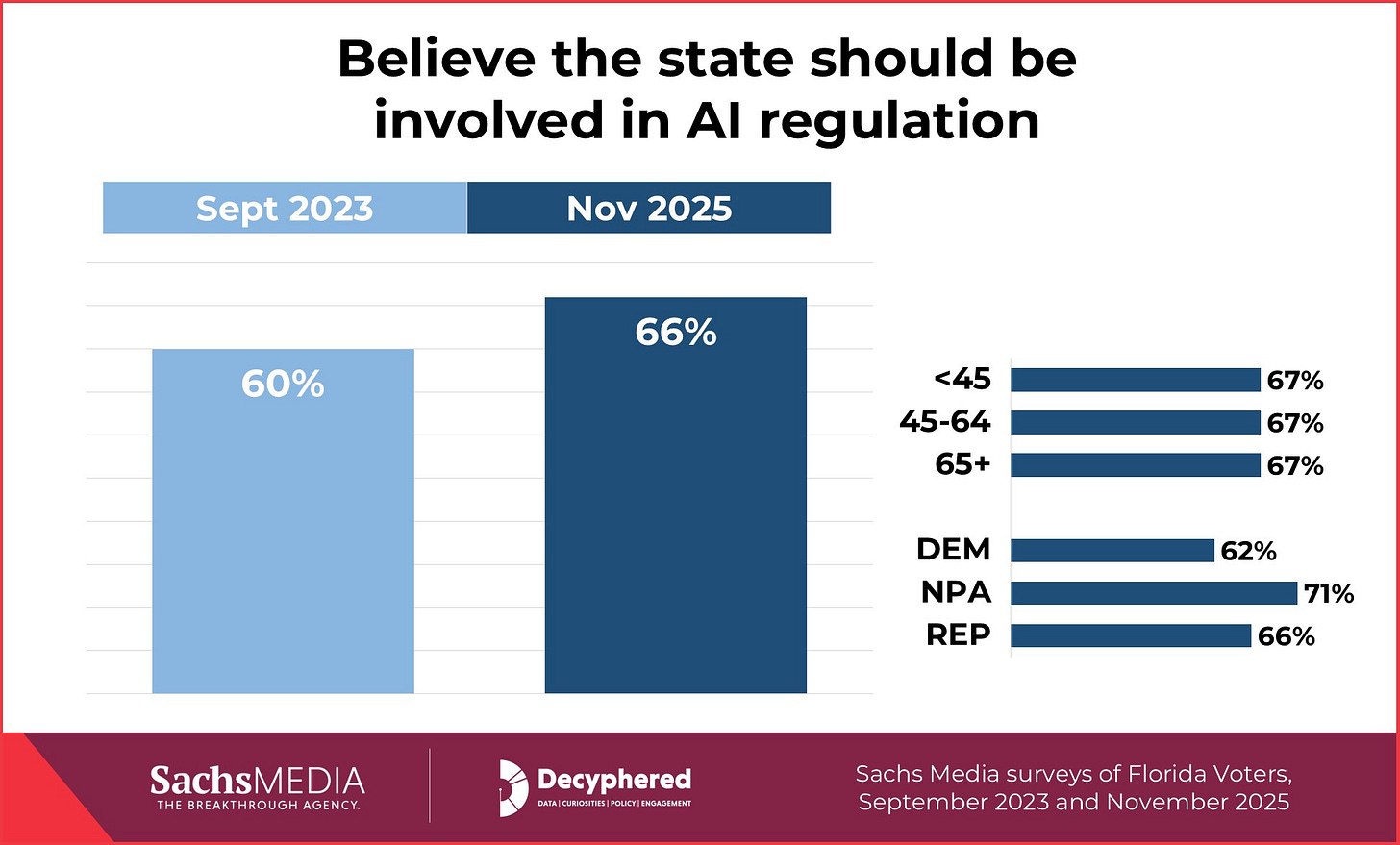

President Trump is soon expected to issue an executive order with a national AI framework, including talk of limiting state-level regulations. But if there is a single clear message from our survey data, it is this: Floridians want AI regulated – including at the state level.

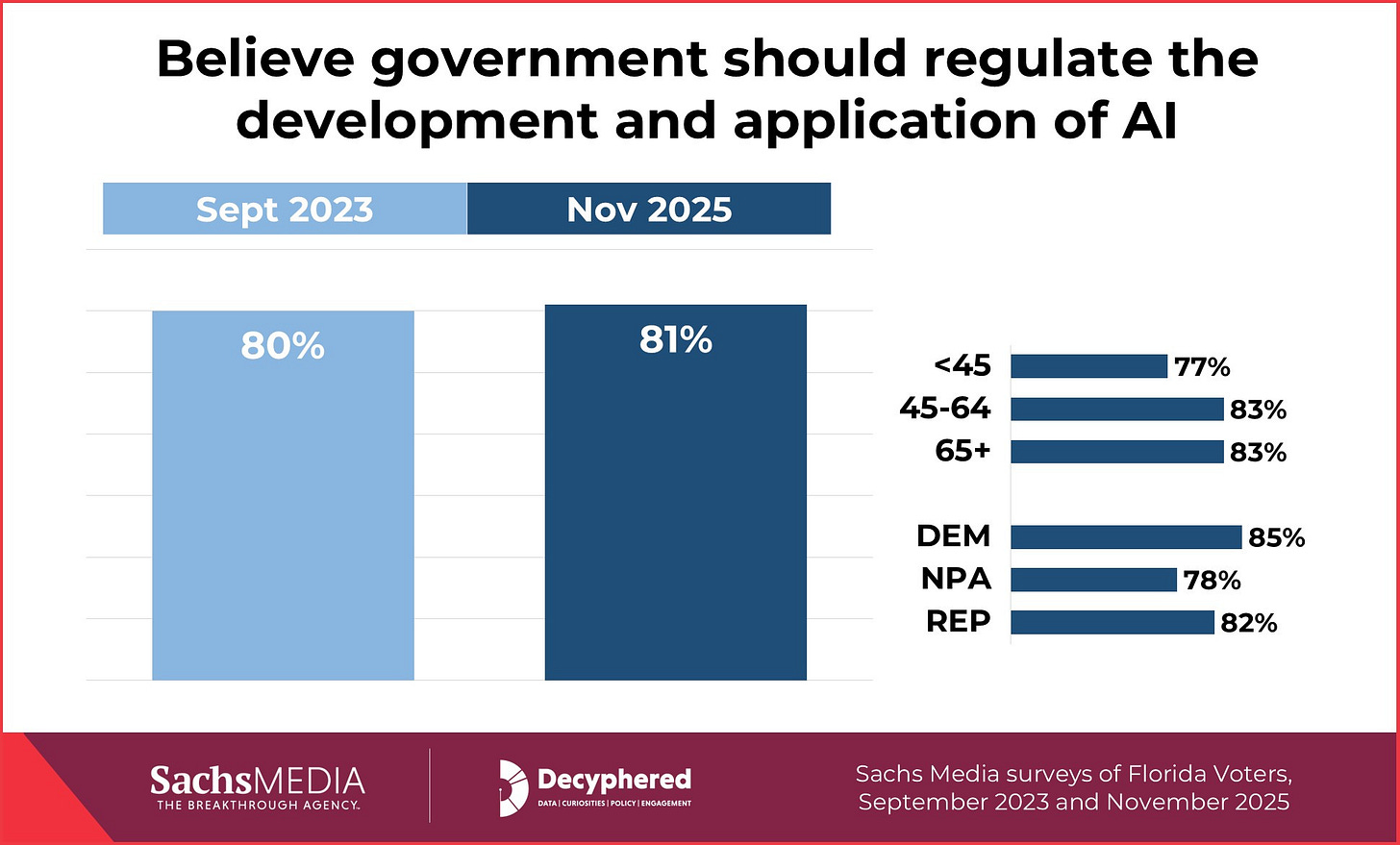

In 2023, 80% said the government should regulate the development and application of AI, a number that remains essentially unchanged at 81% in 2025.

Importantly, the share of Floridians who believe the state government should have a role in regulating AI rose from 60% to 66%. In other words, Florida lawmakers do have a mandate from their constituents to develop a state role in regulating AI. That said, while Florida voters are asking for homegrown rules, the federal government has yet to fully articulate the merits of a federal-only approach that could bring accountability while avoiding a complicated patchwork of 50 different sets of rules.

The shifting drive for government involvement: from national security to personal privacy

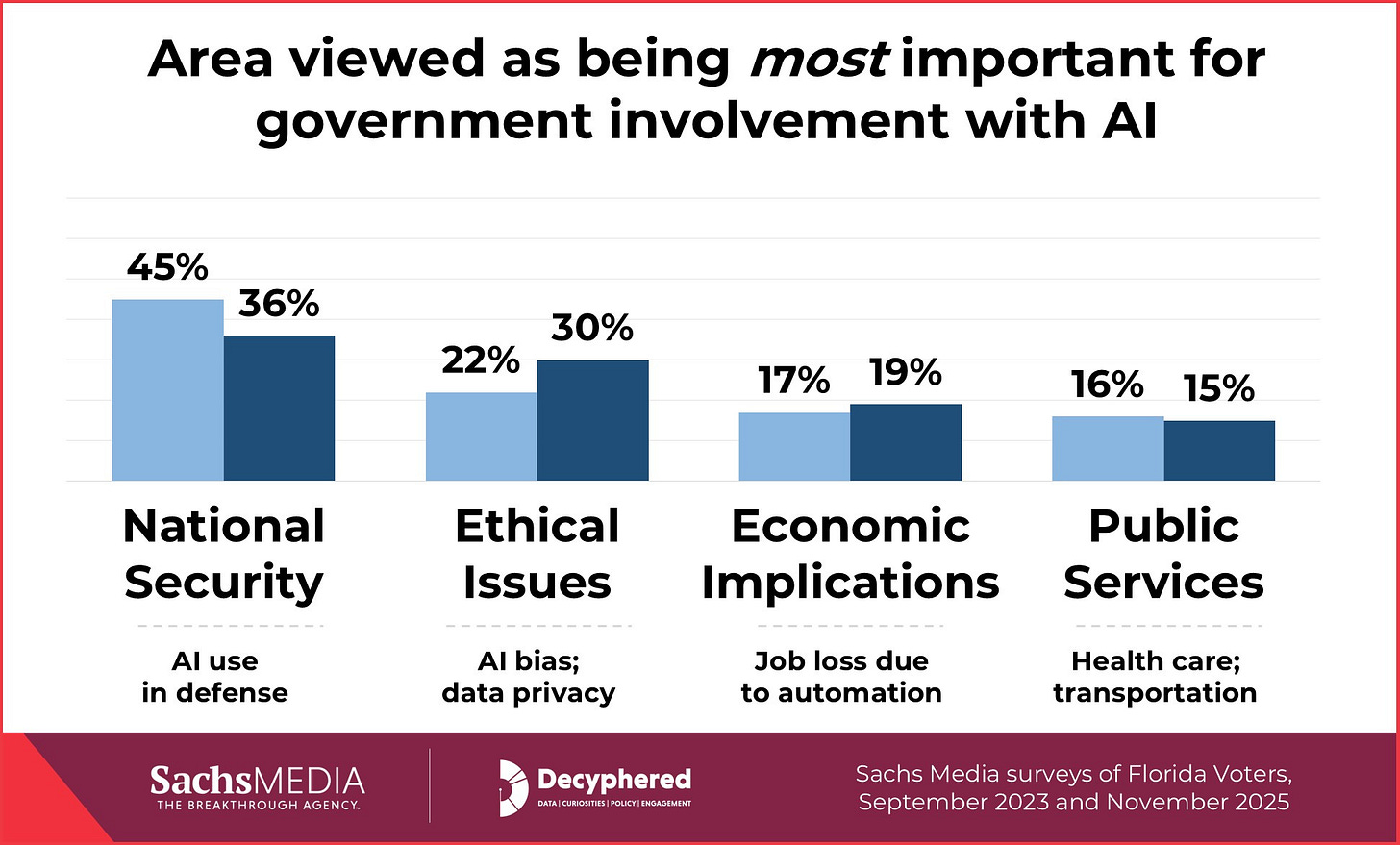

When asked which area is the most important reason for government involvement with AI, voters point to national security as the top concern – although it has fallen from 45% in 2023 to 36% in 2025.

At the same time, ethical issues such as bias and data privacy rose during this period, from 22% to 30%. Economic implications and job loss held roughly steady, moving from 17% to 19%. Finally, the desire for regulation of AI in public services, including health care and transportation, remained in the mid-teens.

This shift in the top priority – from national security toward ethics and personal privacy – suggests that AI no longer feels like a distant geopolitical tool. Instead, it feels like something that handles people’s private information, shapes the information they see, and may eventually make decisions about their jobs or benefits.

Floridians were already worried about AI and elections in 2023

Before the 2024 election cycle really got under way, we asked Floridians a set of questions about AI and democracy. The answers were striking.

87% believed that malicious actors would use AI tools such as deepfakes to spread misinformation in the 2024 elections. That view was almost perfectly bipartisan: 87% of Democrats, 86% of nonpartisans, and 87% of Republicans. Concern grew with voters’ age, from 79% among adults under 30 to 92% among seniors.

Half of voters said the presence of AI reduces their trust in elections overall.

39% believed AI would actually change the outcomes of at least some races in 2024, again with similar levels across parties.

It’s rare to see that level of cross-party agreement on any election question, much less one involving future-oriented technology. The upcoming 2026 cycle will no doubt bring more clarity to the impacts of AI on campaigns and elections.

Voters still think humans are smarter, but fear the direction of travel

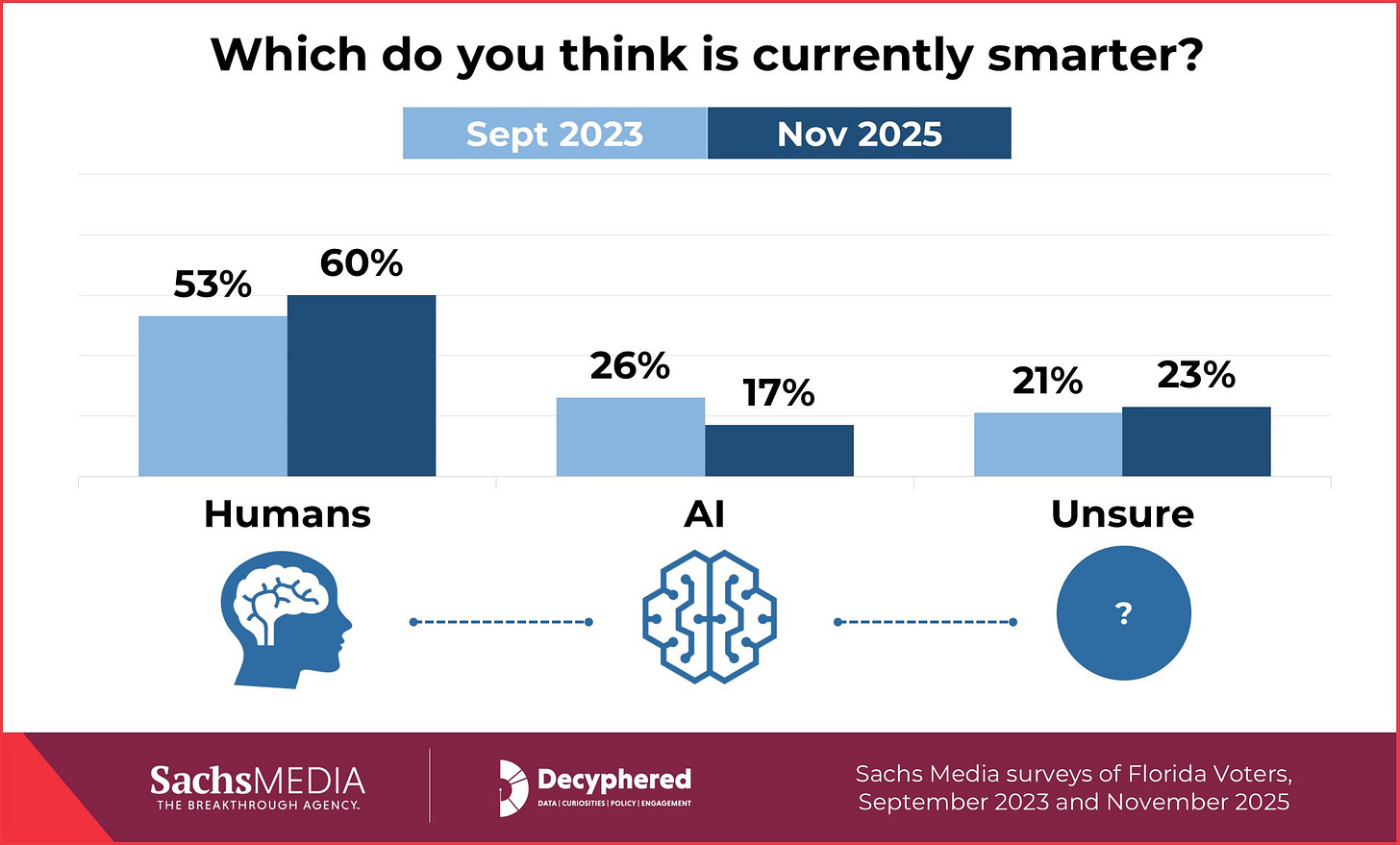

We posed a simple question: Who is currently smarter, humans or AI?

In 2023, voters already favored humans, 53% to 26%. By late 2025, that gap had widened. A full 6 in 10 now say humans are smarter, while only 17% say AI is smarter. About 23% are unsure.

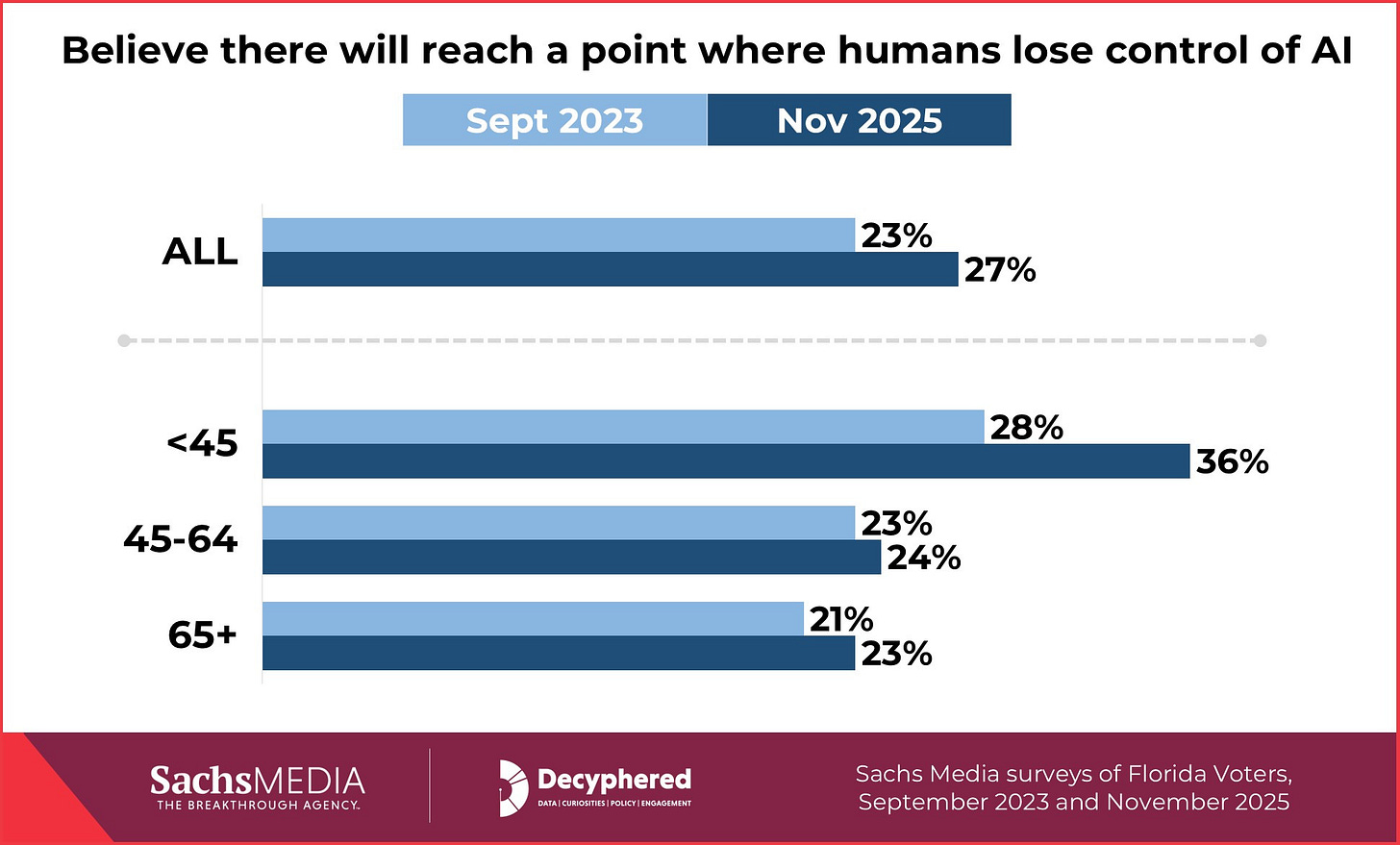

Despite what might appear to be a vote of confidence in human intelligence, more people now believe there will come a time when humans lose control of AI. Two years ago, 23% expected that outcome; by 2025, that share had risen to 27% overall.

So Floridians don’t think AI has surpassed human intelligence today, but they do worry we might be building systems that outstrip our ability to manage them – especially if no rules are put in place.

How AI Week and Florida’s AI Bill of Rights line up with public opinion

Speaker Perez’s AI Week memo instructs House committees to examine both the positive and negative effects of AI across industries, from education and insurance to public safety and the broader economy. That matches the way voters are thinking about AI technology. They see promise, but they also see risk – especially to privacy, fairness, and democratic stability.

Governor DeSantis’ proposed AI Bill of Rights goes a step further. His plan would:

Define explicit rights for Floridians around data privacy and consent.

Require stronger safeguards around AI data centers so local communities aren’t left with higher costs or grid risks.

Prohibit licensed therapy or mental health counseling that relies entirely on AI, and give parents tools to monitor and limit their children’s chatbot use.

Strengthen existing Florida laws against deepfake pornography and nonconsensual use of someone’s likeness, areas that voters already connect to election misinformation and harassment.

AI Week gives Florida a chance to respond to that mood in a thoughtful way. The challenge for lawmakers is to design policies that protect people from documented harms without freezing useful innovation or locking in one company’s advantage.

If Florida gets the balance right, the state can maintain its status as the nation’s bellwether and primary testing ground for innovative public policy, and can become a model for how to govern AI in a way that is grounded in public opinion, not just tech-world optimism or fear.

Voters have already told us what they’re worried about: mental health risks for kids, antisemitic and extremist content, job displacement, and the possibility that deepfakes will erode trust in elections.

The next move belongs to the people gathered in Tallahassee committee rooms this week.

Now ask us professors what we think about students using AI on their assignments. :(